Imagine you stumble upon an article that you absolutely love. It’s insightful, well-written, and now you’re craving more—more articles on similar topics, more pieces that build on the ideas you just read.

But sometimes, it goes the other way. Maybe the article is filled with jargon, references, and concepts you don't quite get. Now, you need to take a step back and find some foundational articles that can fill in the gaps and give you the background knowledge needed to really understand what you just read.

I'm not just talking about getting recommendations for your next great read. I'm talking about creating a whole map—a graph you can navigate logically to build up your foundation and explore the topics you love. This way, learning becomes more intuitive and a little less of a pain in the ass.

The internet is a vast place with more content than we could ever read in a lifetime. But finding exactly what you need often feels like searching for a needle in a haystack. That’s because recommendation, meaning showing the right person the right content at the right time, is a problem engineers have been trying to solve for years. We have some tools to help, like curated educational roadmaps, but those are made by hand and can't possibly cover the endless sea of content available online.

From my experience with fine-tuning open-source Large Language Models (LLMs) for specific tasks, I’ve seen how powerful these models can be in adapting to different contexts and summarizing content. For a project like this, using fine-tuned LLMs could really help create smarter, more dynamic knowledge graphs—maps that show you what to read next, whether you want to dive deeper or start with the basics.

But let’s stop talking about what could be and look at what actually happened when we tried to make this a reality.

The Idea

Let me give you some context: this project is an added feature to TextData, a tool developed at the University of Illinois Urbana-Champaign's TIMAN Lab. TextData helps students find the right information exactly when they need it by bookmarking helpful webpages for specific lectures. The content typically focuses on technical concepts in areas like Natural Language Processing, Machine Learning, and Information Retrieval.

To get started with this project, we need five key components:

- Fine-tuned LLM for Summarizing Webpage Content: We use a fine-tuned FLAN-T5 XL model to generate concise summaries or descriptions of webpage content, ensuring that users quickly understand the main points without losing critical information.

- Fine-tuned LLM for Predicting Edge Categories: We employ a fine-tuned LLaMA 2 7B model to determine the relationship between pairs of webpages. This model labels the edges connecting webpage descriptions, categorizing them based on predefined criteria like "prerequisite," "follows," or "supplementary."

- Backend for Creating the Graph: A backend system is essential to dynamically generate the graph based on the relationships identified by the models. This system handles data processing and ensures the graph structure is correctly maintained.

- Frontend for Displaying the Traversable Graph: The frontend interface visualizes the graph, allowing users to explore the content connections interactively. This UI needs to be intuitive and responsive, making it easy for users to navigate through various articles and understand their relationships.

- Database for Storing the Graph: A robust database is required to store the graph’s nodes (webpage summaries) and edges (relationships). This ensures that data is persistent and can be queried efficiently for fast retrieval and updates.

This project uses FLAN-T5 XL for webpage summarization and LLaMA 2 7B for labeling the edge between a pair of webpages with one of a set of predefined categories. Let's look at why we need summarization, and at how and why we sort relationships into these categories.

The Why

Categories

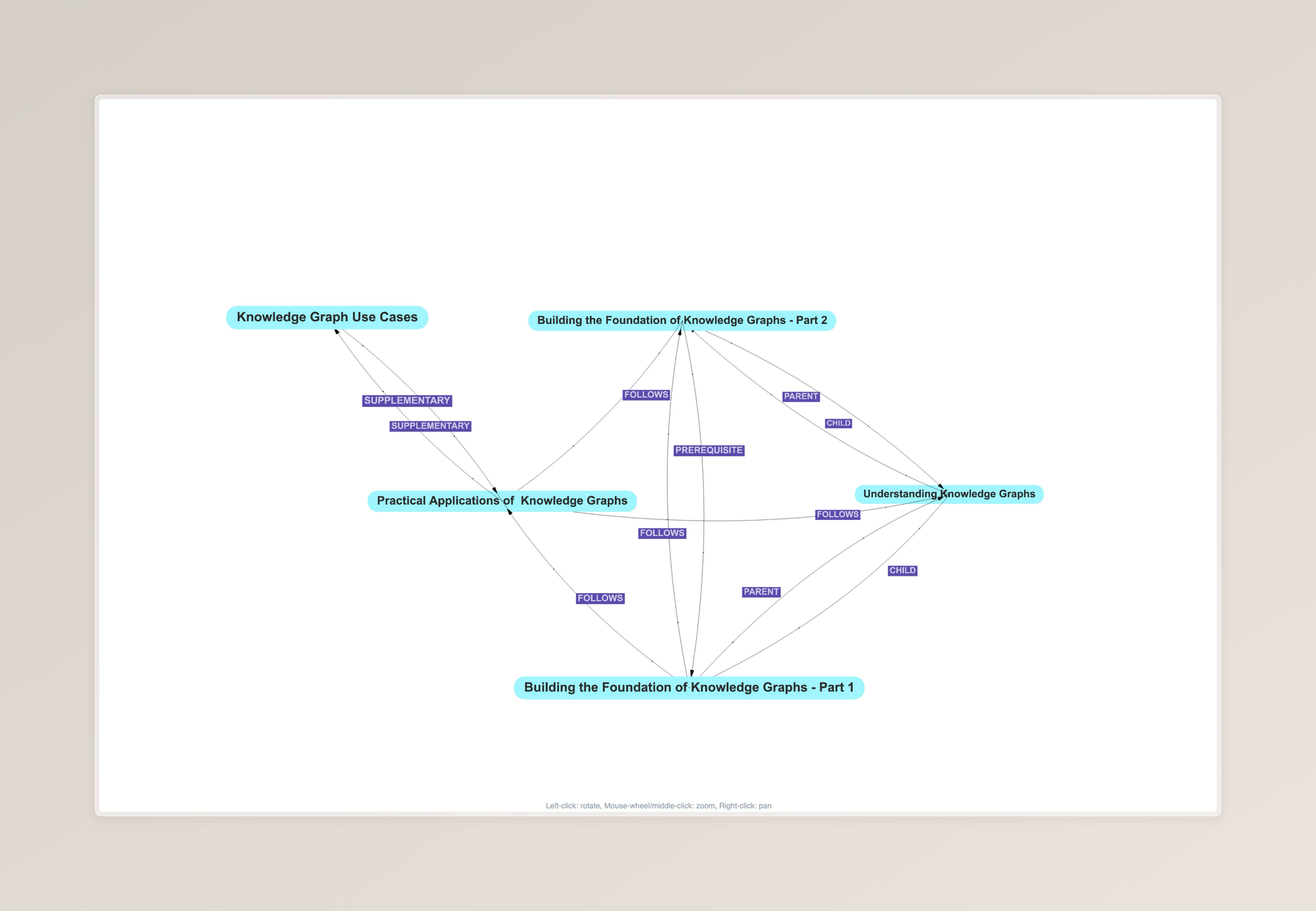

Consider two webpages, A and B. An edge going from A to B is labeled with one of these six categories.

PREREQUISITE: B is required for understanding A.

- A: Building the Foundation of Knowledge Graphs - Part 2

- B: Building the Foundation of Knowledge Graphs - Part 1

PARENT: B contains A, i.e. A is a child of B.

- A: Building the Foundation of Knowledge Graphs - Part 1

- B: Understanding Knowledge Graphs

FOLLOWS: B logically follows A, i.e. A is a prerequisite of B.

- A: Building the Foundation of Knowledge Graphs - Part 1

- B: Building the Foundation of Knowledge Graphs - Part 2

CHILD: A contains B, i.e. A is the parent of B.

- A: Understanding Knowledge Graphs

- B: Building the Foundation of Knowledge Graphs - Part 1

SUPPLEMENTARY: Neither A nor B follows the other; they cover related ground and can be read in any order.

- A: Practical Applications of Knowledge Graphs

- B: Knowledge Graph Use Cases

NO RELATION: A and B have no relation at all.

- A: Understanding Knowledge Graphs

- B: History of America

Notice that, because edge labels are directional, there can be two edges between the same two nodes, one in each direction. For instance, if the edge from A to B is labeled PARENT, then the edge from B to A is labeled CHILD.
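The directional labels can be written down as a small lookup table. The sketch below is only an illustration of the definitions above: the `EdgeLabel` enum, the `REVERSE` table, and the `reverse_edge` helper are hypothetical names, not code from the project.

```python
from enum import Enum


class EdgeLabel(Enum):
    """The six categories an edge from A to B can carry."""
    PREREQUISITE = "prerequisite"    # B is required for understanding A
    PARENT = "parent"                # B contains A
    FOLLOWS = "follows"              # B logically follows A
    CHILD = "child"                  # A contains B
    SUPPLEMENTARY = "supplementary"  # can be read in any order
    NO_RELATION = "no relation"


# Label of the edge B -> A, given the label of the edge A -> B.
REVERSE = {
    EdgeLabel.PREREQUISITE: EdgeLabel.FOLLOWS,
    EdgeLabel.FOLLOWS: EdgeLabel.PREREQUISITE,
    EdgeLabel.PARENT: EdgeLabel.CHILD,
    EdgeLabel.CHILD: EdgeLabel.PARENT,
    EdgeLabel.SUPPLEMENTARY: EdgeLabel.SUPPLEMENTARY,
    EdgeLabel.NO_RELATION: EdgeLabel.NO_RELATION,
}


def reverse_edge(source, target, label):
    """Return the (source, target, label) triple for the opposite direction."""
    return (target, source, REVERSE[label])
```

The symmetric categories (SUPPLEMENTARY and NO RELATION) map to themselves, while the other four come in mirrored pairs.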

Summarization

To create concise summaries of webpages without losing their core essence, we looked at near state-of-the-art models like FLAN-T5 and LLaMA 2. We chose FLAN-T5 because it is smaller and faster and produces comparable summaries with much less computational overhead.

In practice, summarizing webpages requires a few preprocessing steps: scraping the content, cleaning the text by removing unnecessary elements, and preparing it for the model. This preprocessing is a standard engineering task and well-solved, so it doesn't require much focus here.
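For completeness, here is a rough sketch of the cleaning step using only Python's standard library. It is not the project's actual pipeline: the list of skipped tags and the `max_chars` cutoff are assumptions chosen for illustration, and fetching the page is left out.

```python
import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect visible text while ignoring non-content elements."""

    # Assumed set of tags whose contents are not worth summarizing.
    SKIP = {"script", "style", "nav", "header", "footer", "noscript"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.chunks.append(data)


def clean_html(html, max_chars=4000):
    """Strip markup, collapse whitespace, and truncate for the summarizer."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    text = re.sub(r"\s+", " ", " ".join(parser.chunks)).strip()
    return text[:max_chars]
```

The returned string is what would be handed to the summarization model as input.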

The How

Dataset

The first challenge in training this model was the dataset itself—there wasn’t an existing dataset that labeled the relationships between webpages specifically from a learning perspective. To address this, we initially created a dataset manually, selecting webpages from the domains of Natural Language Processing and Information Retrieval. We then augmented this dataset using ChatGPT-4, which helped generate additional connections, including reverse edges between nodes to reflect bidirectional learning paths.

This approach resulted in a dataset of around 6.5K entries, which expanded to about 11K when including the reversed edges. We then split this dataset into training, validation, and testing subsets to fine-tune and evaluate the model effectively.
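To make the augmentation and split concrete, here is a minimal rule-based sketch. We generated reverse edges with ChatGPT-4, so treat this as a stand-in rather than the method we used. The 80/10/10 split, the fixed seed, and the choice to skip pairs that already have an edge in the opposite direction are all assumptions.

```python
import random

# Label of the edge B -> A, given the label of the edge A -> B.
REVERSE = {
    "prerequisite": "follows",
    "follows": "prerequisite",
    "parent": "child",
    "child": "parent",
    "supplementary": "supplementary",
    "no relation": "no relation",
}


def add_reverse_edges(rows):
    """rows: list of (source, target, label). Append any missing reverse edges."""
    seen = {(source, target) for source, target, _ in rows}
    augmented = list(rows)
    for source, target, label in rows:
        if (target, source) not in seen:
            augmented.append((target, source, REVERSE[label]))
            seen.add((target, source))
    return augmented


def split(rows, train=0.8, val=0.1, seed=0):
    """Shuffle deterministically and cut into train / validation / test."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = int(len(rows) * train)
    n_val = int(len(rows) * val)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]
```

Note that a plain random split like this can place an edge and its reverse in different subsets; grouping by page pair before splitting would be a stricter alternative.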

Fine-tuning

We fine-tuned LLaMA 2 7B for this project, running it for up to 50 epochs with early stopping: training halts once the model's performance stops improving, which helps prevent overfitting. To make the model fit on a 64GB GPU, we used 4-bit quantization, which shrinks the model by lowering the precision of its weights so that it runs efficiently on our hardware while largely preserving performance.
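Both ideas in this paragraph can be illustrated without any ML library. The sketch below is not our training code: the `patience` value, the use of validation loss as the monitored metric, and the helper names are assumptions. The memory estimate covers the weights only and ignores activations, gradients, and optimizer state.

```python
class EarlyStopper:
    """Signal a stop once validation loss fails to improve `patience` times in a row."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def epochs_run(val_losses, max_epochs=50, patience=3):
    """Replay a sequence of validation losses and report how many epochs would run."""
    stopper = EarlyStopper(patience)
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if stopper.should_stop(loss):
            return epoch
    return min(len(val_losses), max_epochs)


def weight_memory_gb(n_params, bits_per_weight):
    """Approximate memory for the weights alone, in GB (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9
```

For a nominal 7 billion parameters, `weight_memory_gb(7e9, 16)` comes to about 14 GB and `weight_memory_gb(7e9, 4)` to about 3.5 GB, which is the kind of headroom 4-bit quantization buys.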

Visualization

The Results

Understanding Nuances

The model struggles with the nuanced differences between similar categories, such as "parent" versus "prerequisite" and "child" versus "follows." When we simplify the label set by removing these confusing distinctions, the model's accuracy improves significantly. This suggests that, from a linguistic standpoint, the model treats these terms as near-synonyms and fails to grasp the hierarchical relationships they encode.
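One way to measure this effect is to score the same predictions twice, once with the full label set and once with the confusable labels merged. The sketch below assumes that "parent" is folded into "prerequisite" and "child" into "follows"; that particular merge, and the function names, are illustrative rather than a record of our evaluation code.

```python
# Assumed merge of the categories the model tends to confuse.
MERGE = {"parent": "prerequisite", "child": "follows"}


def collapse(label):
    """Map a label onto the simplified label set."""
    return MERGE.get(label, label)


def accuracy(gold, predicted, collapse_labels=False):
    """Fraction of predictions that match the gold labels."""
    if len(gold) != len(predicted) or not gold:
        raise ValueError("gold and predicted must be non-empty and the same length")
    if collapse_labels:
        gold = [collapse(g) for g in gold]
        predicted = [collapse(p) for p in predicted]
    correct = sum(g == p for g, p in zip(gold, predicted))
    return correct / len(gold)
```

Comparing `accuracy(gold, predicted)` with `accuracy(gold, predicted, collapse_labels=True)` shows how much of the error comes from these two pairs alone.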

Cross-Domain Categorization


Better UI/UX

Running the model over the entire dataset makes it nearly impossible to pinpoint the specific articles a user needs. Even though the data itself might be accurate, the graph becomes too complex to navigate. However, if we narrow down the graph to a smaller subsection, focusing particularly on the article the user just read, the visualization becomes much more manageable and easier to navigate.
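Narrowing the graph can be as simple as a breadth-first walk outward from the article the user just read. The sketch below is one possible approach, not the project's backend: the `max_hops` limit, the decision to drop "no relation" edges, and the function name are assumptions.

```python
from collections import deque


def neighborhood(edges, start, max_hops=2, skip_labels=("no relation",)):
    """Return the nodes and edges reachable from `start` within `max_hops`.

    edges: iterable of (source, target, label) triples.
    """
    adjacency = {}
    for source, target, label in edges:
        if label in skip_labels:
            continue
        adjacency.setdefault(source, []).append((target, label))

    hops = {start: 0}
    queue = deque([start])
    kept = []
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue  # do not expand past the hop limit
        for target, label in adjacency.get(node, []):
            kept.append((node, target, label))
            if target not in hops:
                hops[target] = hops[node] + 1
                queue.append(target)
    return set(hops), kept
```

The walk follows outgoing edges only, so it relies on the graph already storing an edge in each direction for every related pair.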